NORC at the University of Chicago

How to Write an Evaluation Plan


Learning Objectives

• Understand what an evaluation plan is and the purpose of developing one
• Identify key sections of an evaluation plan
• Understand what information to include in an evaluation plan


What is an Evaluation Plan?

• Details the program model being evaluated
• Describes and justifies the evaluation approach selected
• Provides instructions for the evaluation: a guide for each step of the evaluation process


Purpose of an Evaluation Plan

• Helps decide what information is needed to address the evaluation objectives
• Helps identify methods for getting the needed information
• Helps determine a reasonable and realistic timeline for the evaluation
• Creates a shared understanding between stakeholders (e.g., the grantee staff, evaluator, AmeriCorps staff)


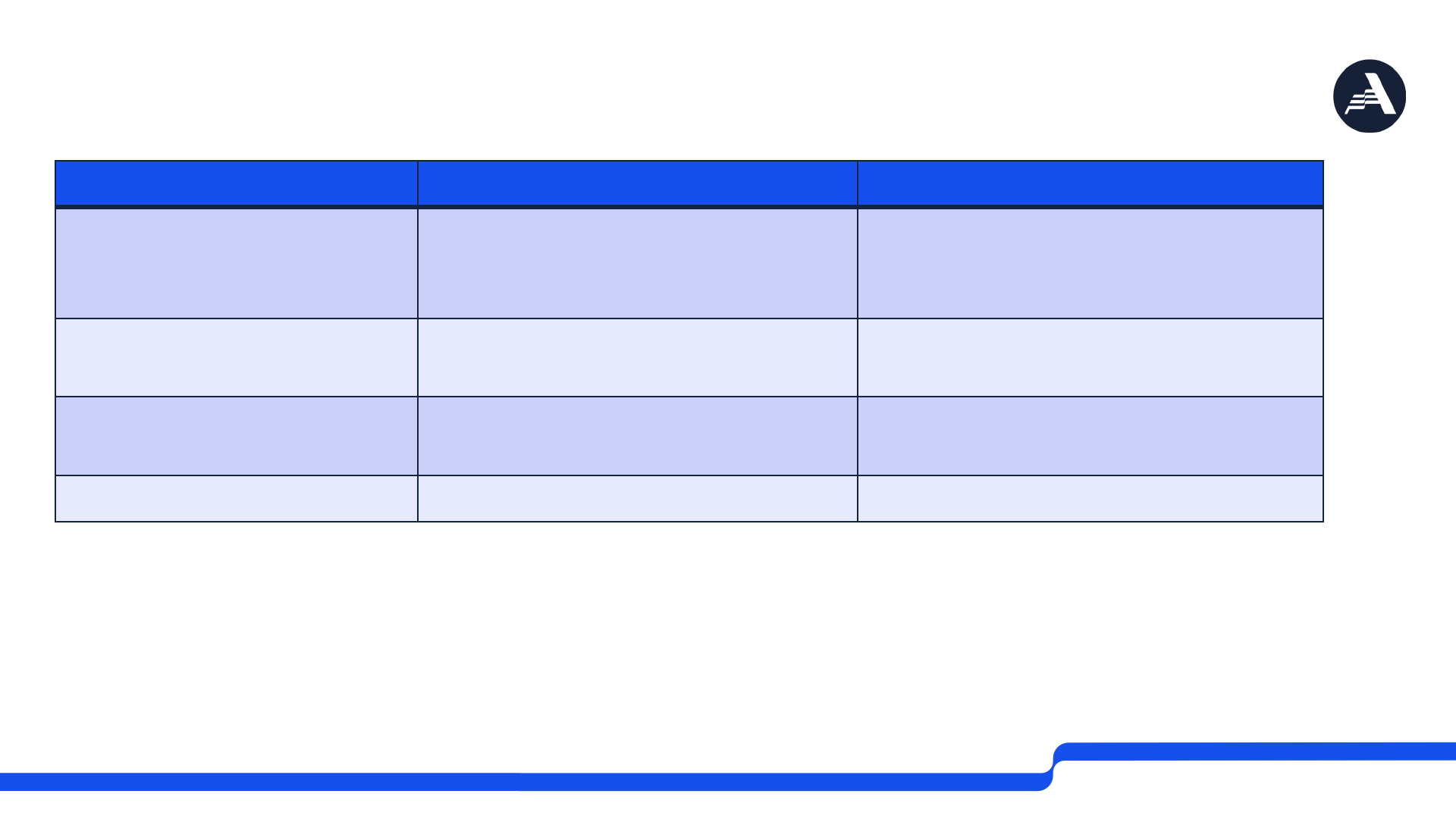

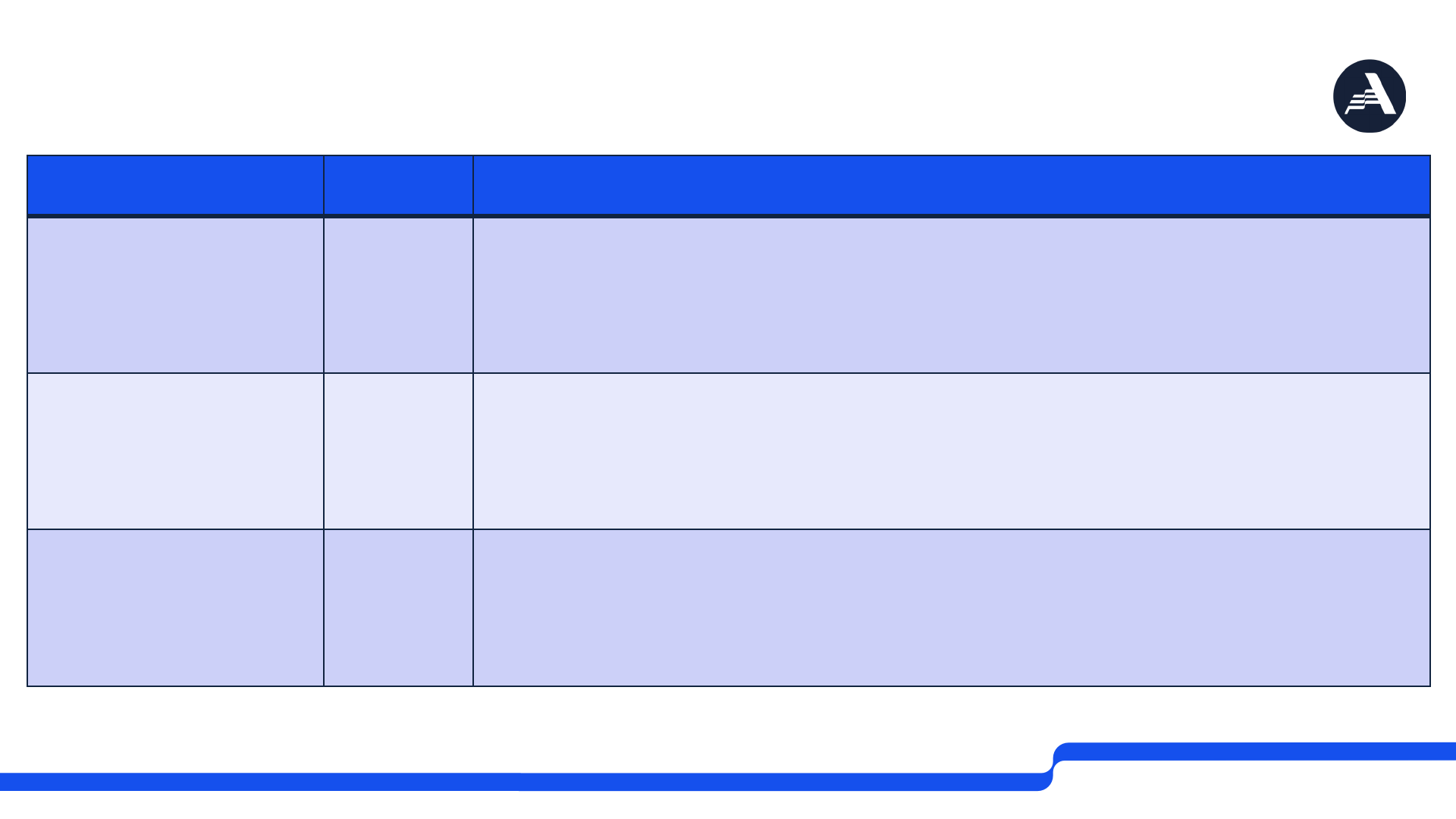

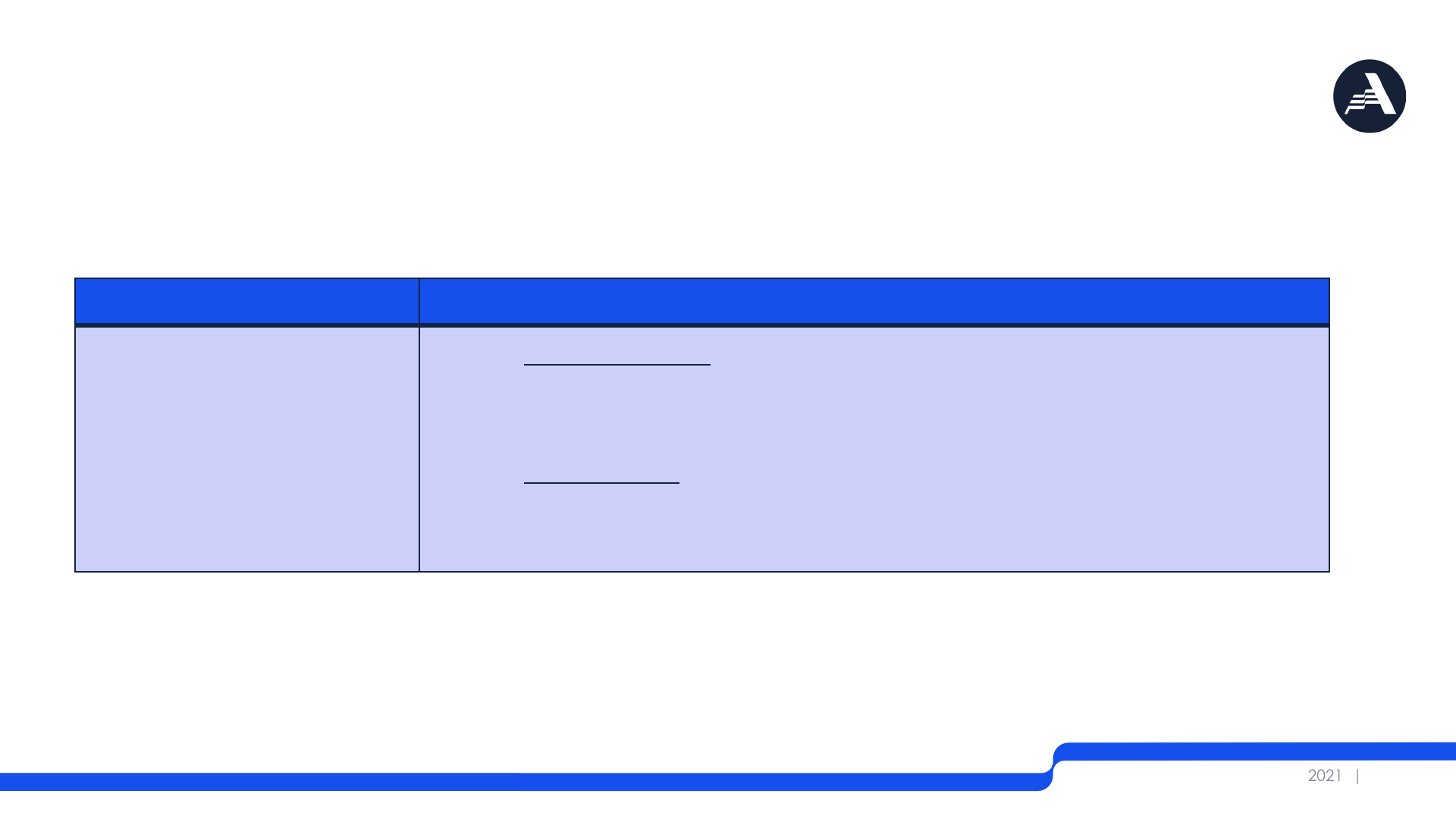

Small and Large Requirements for an Evaluation Plan

When are grantees required to conduct an evaluation?
• Small grantees (<$500,000): During the second competitive grant cycle and all following competitive cycles.
• Large grantees (>$500,000): During the second competitive grant cycle and all following competitive cycles.

When must evaluation plans be approved?
• Small grantees: By the end of the first year of the second grant cycle.
• Large grantees: By the end of the first year of the second grant cycle.

What evaluation design is required?
• Small grantees: Any design (e.g., process, non-experimental outcomes).
• Large grantees: Impact evaluation (experimental or quasi-experimental).

What type of evaluator?
• Small grantees: Internal or external evaluator.
• Large grantees: External evaluator.

Alternative Evaluation Approach (AEA)

• Funding threshold (Grantee: Large): Grantees who receive an average of less than $1 million per year can request to be exempt from the large grantee requirements and conduct an internal non-impact evaluation.
• Previous impact evaluation (Grantee: Large): Previously conducted an impact evaluation with demonstrated evidence of effectiveness (i.e., Strong or Moderate evidence).
• AmeriCorps National Evaluation (Grantee: Large or Small):
  - Grantees participating in an AmeriCorps Office of Research and Evaluation national evaluation (i.e., bundled evaluation or Return on Investment) that will not be completed during the current grant cycle.
  - Large grantees can also request this if the national evaluation's design does not fulfill the requirements for a large grantee.

Alternative Evaluation Approach (AEA), continued

• Structure of program or grantee organization (Grantee: Large):
  - Insurmountable challenges forming a comparison group.
  - Significant changes to program design.
• Replication (Grantee: Large):
  - Implementing an evidence-based intervention with fidelity in a new setting.
  - A grantee's application must be assessed at the Strong or Moderate evidence level.
• Timing (Grantee: Large or Small):
  - Evaluation will not be completed by the end of the current grant cycle.
  - AEA approval is required only if an interim evaluation report will not meet evaluation requirements.

Key Components of a Plan


I. Theory of change
II. Scope of the evaluation
III. Evaluation outcome(s) of interest
IV. Research questions
V. Evaluation design
VI. Sampling methods
VII. Data collection procedures, data sources, and measurement tools
VIII. Analysis plan
IX. Evaluator qualifications
X. Timeline
XI. Budget


I. Theory of Change

• Describe how the activities undertaken by your program contribute to a chain of results that leads to the intended outcomes
• Your evaluation plan must align with your theory of change and your logic model

Theory of change elements: program context, sequence of required events, underlying assumptions
Logic model: short-term outcomes, intermediate outcomes, long-term outcomes


II. Scope of the Evaluation

• State the goal(s) of the evaluation and specify which program activities will be assessed
• Programs are not expected to evaluate every component of their logic model. Instead, the evaluation may focus on a subset of program activities.


III. Outcome(s) of Interest

• Describe what outcomes your evaluation will measure:
  - Process / implementation outcomes or outputs
  - Program beneficiary outcomes
  - Member outcomes
• Your outcomes of interest should be:
  - Part of your program's theory of change and included in your logic model
  - Feasible for your program to measure given the source(s) of data needed and level of effort required


IV. Research Questions

• One or more questions that define exactly what your evaluation intends to accomplish
• The following are characteristics of a good research question:
  - Clearly stated and specific
  - Aligns with your theory of change / logic model
  - Connects to the outcomes of interest
  - Measurable and feasible to answer
  - Aligns with your chosen evaluation design


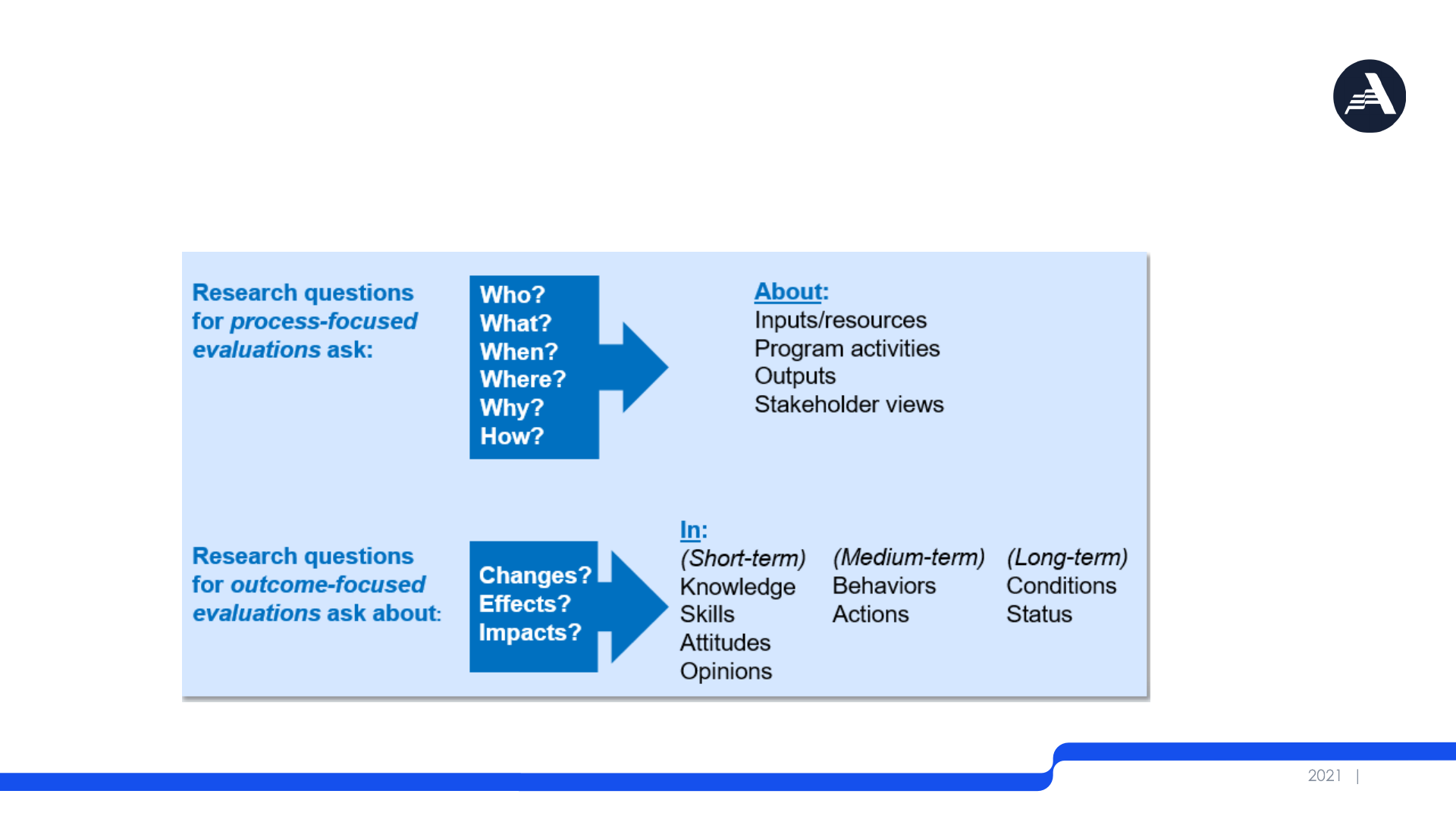

Research questions are worded differently depending on their

focus:

IV. Research Questions


V. Evaluation Design

Description of general categories of evaluation designs:

Process Evaluation
• Examines the extent to which a program is operating as intended by assessing ongoing program operations and determining whether the target population is being served
• Results may be used to determine what changes and/or improvements should be made to the program's operations

Outcome Evaluation
• Measures changes in knowledge, attitude(s), behavior(s), and/or condition(s) among program beneficiaries or other stakeholder groups
• Results may demonstrate what the program has achieved

Impact Evaluation
• Measures changes in program beneficiaries or stakeholders relative to a reasonably similar comparison/control group
• Results are an estimate of the program's impact on beneficiaries or other stakeholder groups

V. AmeriCorps Approved Evaluation Designs

20

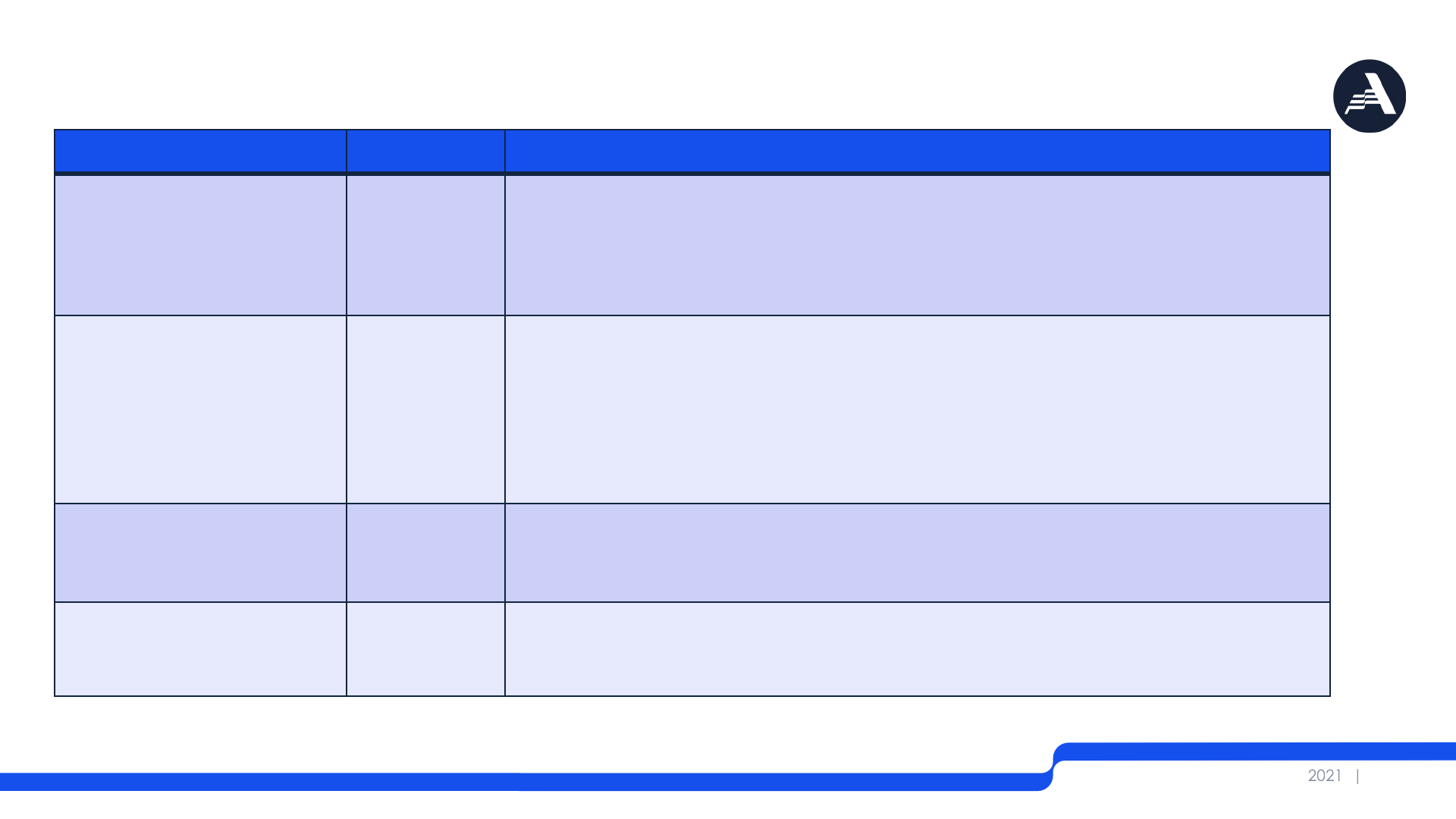

Type of Design Category Details needed on evaluation design

Experimental

design/Randomized

Controlled Trial

(RCT)

Impact • Description of the random assignment procedures that

will be used to form treatment and control groups

• Eligibility criteria for inclusion in the study

Quasi-experimental

Design (QED)

Impact • Description of the approach for identifying a reasonably

similar comparison group (e.g., propensity score

matching, difference in difference analysis)

• List of variables (covariates) to be used to statistically

equate treatment and comparison groups at baseline

Non-experimental

Design

Outcome • Description of whether pre- AND post-test measurements

OR post-only measurements will be used

Process Process • Description of the methods that will be used (i.e.,

qualitative only, quantitative only, or mixed methods)
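As an illustration of what a description of random assignment procedures can pin down (the eligible list, how units are shuffled, and how the split is recorded), here is a minimal Python sketch of simple randomization of an already-screened list of eligible participants. The function name `randomly_assign`, the seed, and the 50/50 split are hypothetical choices for illustration, not AmeriCorps requirements; many studies instead randomize within sites or blocks.

```python
import random


def randomly_assign(eligible_ids, seed, treatment_share=0.5):
    """Hypothetical helper: simple random assignment of eligible participants.

    Sorting first gives a fixed starting order, and a recorded seed makes the
    shuffle reproducible, so the assignment can be re-created and audited.
    """
    ids = sorted(eligible_ids)            # fixed order before shuffling
    rng = random.Random(seed)             # dedicated generator; log the seed in the plan
    rng.shuffle(ids)
    n_treatment = round(len(ids) * treatment_share)
    return {"treatment": ids[:n_treatment], "control": ids[n_treatment:]}


# Example: 20 eligible participants with IDs P001..P020
groups = randomly_assign([f"P{i:03d}" for i in range(1, 21)], seed=20240901)
print(len(groups["treatment"]), len(groups["control"]))  # 10 10
```

Re-running with the same seed and the same eligible list reproduces the same groups, which is one way to document the procedure in the plan.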


VI. Sampling Methods

• For each data source, describe the sample or the target population for the evaluation, including:
  - Eligibility criteria that limit the sample or population (e.g., participation level, site/location, age or grade level)
  - Sampling procedures (e.g., random, purposeful, or convenience sampling)
  - Expected size of the sample or population
  - Rationale or justification for sample sizes
• For ASN grantees, sample size justification is required for non-experimental designs (i.e., a rationale for sample selection) and impact designs (i.e., a power analysis)

VI. Sampling Methods (continued)

• A power analysis is used to determine:
  - How large a sample is needed to enable statistical judgments that are accurate and reliable (i.e., the required minimum sample size)
  - How likely your statistical test is to detect effects of a given size in a particular situation
• It is recommended that a plan include the results of a power analysis if:
  - It is an impact evaluation design (i.e., RCT or QED)
  - Your analysis involves statistical significance testing
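To make the power analysis concrete, the sketch below uses the standard normal-approximation formula for a two-sided comparison of means between two equal-sized groups, n per group = 2 * (z(1 - alpha/2) + z(power))^2 / d^2, where d is the smallest effect you want to detect, expressed as a standardized mean difference. The function name and the defaults alpha = 0.05 and power = 0.80 are common conventions assumed here, not AmeriCorps requirements. The approximation runs slightly below exact t-based results (such as those from G*Power), and it ignores clustering (e.g., participants nested in sites or classrooms), which increases the required sample.

```python
import math
from statistics import NormalDist


def min_sample_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate minimum sample size per group (hypothetical helper).

    Two-sided, two-sample comparison of means with equal group sizes,
    normal approximation; effect_size is a standardized mean difference.
    """
    z = NormalDist()                      # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)    # critical value for a two-sided test
    z_power = z.inv_cdf(power)            # quantile matching the desired power
    n = 2 * (z_alpha + z_power) ** 2 / effect_size ** 2
    return math.ceil(n)                   # round up to whole participants


for d in (0.2, 0.5):
    print(d, min_sample_per_group(d))
# 0.2 393
# 0.5 63
```

Smaller detectable effects drive the sample size up with the square of 1/d, which is why the expected effect size should be justified in the plan alongside the resulting number.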


VII. Data Collection Procedures, Data Sources, and Measurement Tools

• Provide a detailed description of the data that will be collected or extracted to answer the research questions. This section should address:
  - Who/what will be the source of the data?
  - What tools/instruments will be used to collect data?
  - What is the plan for accessing administrative/extant data?
  - What information will be collected/compiled?
  - When and how often will data be collected?
• Ensure that the data are adequate for addressing all of the study's research questions


VIII. Analysis Plan

Explain how each data source will be analyzed to produce findings that address the evaluation's research questions.

Non-experimental / Process evaluation design
Details needed on analysis:
• The quantitative data analysis techniques that will be used to produce the study findings (e.g., Chi-square, t-tests, frequencies, means)
• The qualitative data analysis techniques that will be used to produce the study findings (e.g., content analysis, thematic coding)
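For a non-experimental outcome design with pre- and post-test measurements on the same participants, a t-test on the score changes is one of the quantitative techniques listed above. The Python sketch below computes the mean change and the paired t statistic using only the standard library; the function name `paired_change` and the example scores are hypothetical. It stops short of a p-value, which would come from the t distribution with the returned degrees of freedom (for example, via `scipy.stats.ttest_rel` in SciPy).

```python
import math
from statistics import mean, stdev


def paired_change(pre_scores, post_scores):
    """Hypothetical helper for a pre/post design on the same participants.

    Returns (mean change, paired t statistic, degrees of freedom).
    """
    if len(pre_scores) != len(post_scores) or len(pre_scores) < 2:
        raise ValueError("need matched pre/post scores for at least two participants")
    changes = [post - pre for pre, post in zip(pre_scores, post_scores)]
    mean_change = mean(changes)
    std_error = stdev(changes) / math.sqrt(len(changes))  # SE of the mean change
    return mean_change, mean_change / std_error, len(changes) - 1


# Example with made-up scores for five participants
change, t_stat, df = paired_change([60, 55, 70, 65, 58], [66, 57, 75, 70, 62])
print(round(change, 2), round(t_stat, 2), df)  # 4.4 6.49 4
```

Without a comparison group, a pre/post change like this describes what changed for participants; it does not by itself estimate the program's impact.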

VIII. Analysis Plan (continued)

NOTE: Chi-square tests and t-tests are not adequate for conducting a QED analysis. Instead, a multiple regression model (e.g., ANCOVA) is preferred, so that covariates (e.g., pre-test measures and other variables that may affect the outcome of interest) can be controlled for in the analysis.
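The note's point about covariates can be seen in a small example. The Python sketch below estimates the treatment effect as the coefficient on a treatment indicator in a regression of the post-test outcome on that indicator plus one pre-test covariate (an ANCOVA-style model), using the Frisch-Waugh-Lovell result so that only the standard library is needed. The function names and the toy data are hypothetical; a real QED analysis would typically use a statistics package, more covariates, and standard errors.

```python
from statistics import fmean


def residualize(values, covariate):
    """Residuals of `values` after a simple linear regression on `covariate` (with intercept)."""
    mean_x, mean_y = fmean(covariate), fmean(values)
    sxx = sum((x - mean_x) ** 2 for x in covariate)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(covariate, values))
    slope = sxy / sxx
    return [y - (mean_y + slope * (x - mean_x)) for x, y in zip(covariate, values)]


def adjusted_effect(post, treated, pre):
    """Coefficient on `treated` in post ~ intercept + treated + pre.

    By Frisch-Waugh-Lovell, it equals the slope from regressing the
    residualized outcome on the residualized treatment indicator.
    """
    y_resid = residualize(post, pre)
    t_resid = residualize([float(t) for t in treated], pre)
    return sum(t * y for t, y in zip(t_resid, y_resid)) / sum(t * t for t in t_resid)


# Toy data: the treatment group starts one point higher on the pre-test
pre = [10, 12, 14, 11, 13, 15]
treated = [0, 0, 0, 1, 1, 1]
post = [7, 8, 9, 10.5, 11.5, 12.5]

naive = fmean(post[3:]) - fmean(post[:3])
print(round(naive, 2), round(adjusted_effect(post, treated, pre), 2))  # 3.5 3.0
```

Because the treatment group began higher on the pre-test, the simple difference in post-test means (3.5) overstates the effect built into the toy data (3.0); controlling for the pre-test recovers it.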

Impact design (RCT or QED)
Details needed on analysis:
• The statistical test/model that will be used to compare outcomes for the treatment and comparison groups
• Plans to assess baseline equivalency of the treatment and comparison groups and any statistical adjustments to be used (if necessary)
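One common way to assess baseline equivalency is to compute a standardized mean difference on a baseline measure (such as the pre-test) between the treatment and comparison groups. The Python sketch below uses Hedges' g (pooled standard deviation with a small-sample correction). The cut-offs in `equivalence_status` are those used by the U.S. Department of Education's What Works Clearinghouse; they are included as an assumption for illustration, so confirm which standard applies to your evaluation. Function names and data are hypothetical.

```python
import math
from statistics import mean, variance


def hedges_g(treatment, comparison):
    """Standardized mean difference (treatment minus comparison) on a baseline measure."""
    n_t, n_c = len(treatment), len(comparison)
    pooled_sd = math.sqrt(
        ((n_t - 1) * variance(treatment) + (n_c - 1) * variance(comparison))
        / (n_t + n_c - 2)
    )
    correction = 1 - 3 / (4 * (n_t + n_c) - 9)  # small-sample correction factor
    return correction * (mean(treatment) - mean(comparison)) / pooled_sd


def equivalence_status(g):
    """Classify |g| with What Works Clearinghouse-style cut-offs (assumed here)."""
    size = abs(g)
    if size <= 0.05:
        return "equivalent"
    if size <= 0.25:
        return "equivalent only with statistical adjustment"
    return "not equivalent"


g = hedges_g([10, 12, 14], [11, 13, 15])
print(round(g, 2), equivalence_status(g))  # -0.4 not equivalent
```

Reporting this check for each baseline measure, and stating which adjustment (e.g., including the measure as a covariate) will be used in the middle band, covers both items listed for impact designs.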


IX. Evaluator Qualifications

• Describe the evaluator(s) who will be carrying out the evaluation activities, including:
  - Whether they are internal or external to the program;
  - If using an external evaluator, a clear description of how they are independent from the program (e.g., no conflicts of interest); and
  - Qualifications for conducting the evaluation
• The type of evaluator used may also depend on the size of your program:
  - Small grantees (<$500,000) can use an internal or external evaluator
  - Large grantees (>$500,000) are expected to use an external evaluator, unless a Funding Threshold AEA is pursued


X-XI. Timeline and Budget

• Provide a detailed timeline of when the major evaluation activities will occur (e.g., evaluation planning, hire evaluator, develop instruments, collect data, analyze data, write report)
  - Helps determine if the evaluation is on track to be completed on time (i.e., before the next GARP cycle)
• Specify the budget allotted for the evaluation

General Guidelines to Follow

• Know what type of evaluation you must complete
  - Small vs. large grantee requirements
  - AEA options available
• Fully describe each component of the plan
  - See the ASN Notice of Funding Opportunity (NOFO) and Frequently Asked Questions: Evaluation for the relevant grant cycle
  - Review the Evaluation Plan template
• Ensure that your descriptions of the components align with one another (i.e., are interrelated)

Internal Resources

• Your AmeriCorps Portfolio Manager or State Commission representative
• ASN – Evaluation Resources: https://www.americorps.gov/grantees-sponsors/evaluation-resources
• 2021 ASN Grantee Symposium presentation "Demystifying Evaluation Plans": https://www.youtube.com/watch?v=GJ0s9HL8Q2A
• AmeriCorps Evaluation TA Portal: https://americorpsevaluationta.norc.org

Internal Resources (continued)

• Resources for State and National Direct Grantees: https://americorps.gov/grantees-sponsors/directs-territories-tribes?field_document_type_tax_target_id=19756#resources
• This page includes information about the following:
  - AEA Guidance and Request Form
  - Evaluation FAQs
  - Evaluation Plan Template
  - Evaluation Requirements

Questions?


Carrie E. Markovitz, Ph.D.

NORC at the University of Chicago

markovitz[email protected]

To contact the Office of Research and

Evaluation: [email protected]

Thank you!